Announcing Dimspector! Dimspector is a tool for developers that statically analyzes PyTorch code to infer the shapes of tensors and detect shape mismatches before you run your code.

Dimspector is still very much in progress, which means there are bits of Python it can't analyze yet and likely contains bugs. Despite this, we wanted to make a usable version now to get a better signal on how to prioritize those bits.

How does it work?

While analyzing dynamic languages like Python is difficult, we (perhaps naively?) were hopeful that an analysis built with heuristics that attempts to maximize true positive bug catches rather than soundness would be possible / useful (somewhat inspired by RacerD). It's still an open question of how much road this approach has left, but so far we're tentatively optimistic we'll be able to build Dimspector into something that is useful for developers.

Developers add shape annotations to parameters

Developers can use jaxtyping (which despite the name, provides general tensor shape annotations) to add shape annotations to function parameters:

from jaxtyping import Float

import torch

from torch import Tensor

def matmul(x: Float[Tensor, "batch m k"], y: Float[Tensor, "batch k n"]) -> Float[Tensor, "batch m n"]:

"""Matrix multiplication."""

return x @ y

Each "dimensional variable" can be symbolic (batch), concrete (16), or an expression that mixes the two (batch-1).

You can also annotate integer params as dimensional variables:

def create_tensor(size: int) -> Float[Tensor, "{size}"]:

return torch.ones(size)

Dimspector makes the assumption that signatures will have a singleton of each dimensional variable in a shape or as an integer param. This means it is invalid to write,

def process_with_reduced_dim(

x: Float[Tensor, "batch d-1"],

weights: Float[Tensor, "d-1 out"]

) -> Float[Tensor, "batch out"]:

result = x @ weights

return result

Instead, developers must write,

def process_with_reduced_dim(

x: Float[Tensor, "batch d"],

weights: Float[Tensor, "d out"]

) -> Float[Tensor, "batch out"]:

result = x @ weights

return result```python

Dimspector also makes the assumption that two symbolic dimensional variables are the same only if they have the same name. This simplifies the analysis heavily, allowing us to check equality of dimensional variable expressions via canonicalization and syntactic equality instead of using an SMT solver.

Dimspector infers shapes and dimensional variables

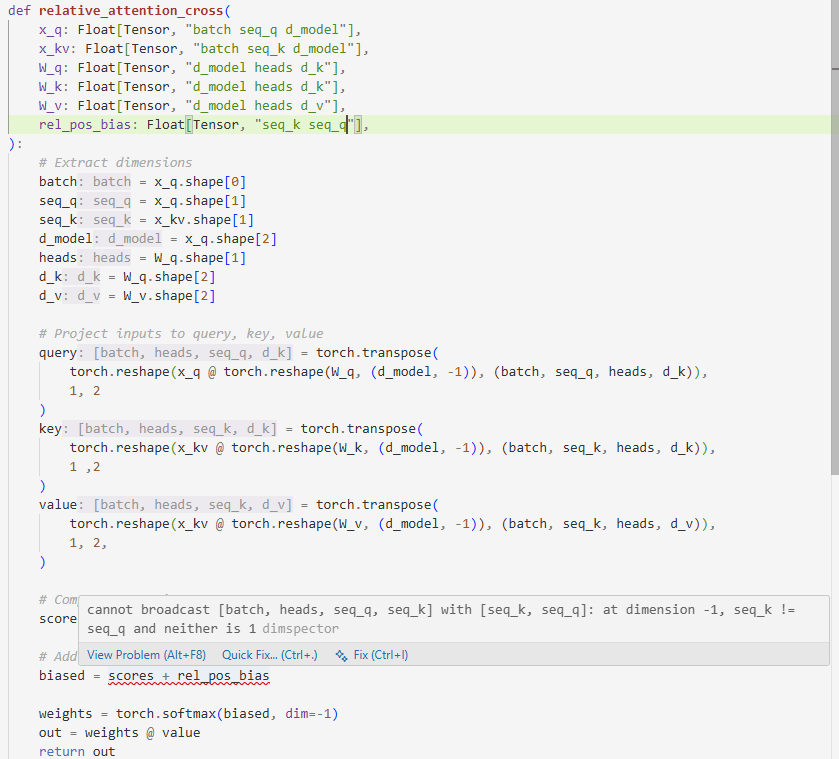

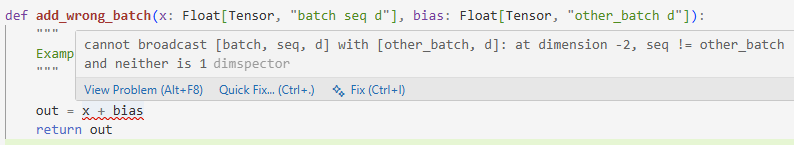

From these shape annotations, Dimspector can infer dimensional variables and shapes, which can (optionally) be used to provide inlay hints in your editor and to provide error diagnostics on shape mismatches:

The analysis that Dimspector runs is compositional, meaning it only needs to analyze each function/method once, leading to roughly linear time complexity in the size of projects. While there are still low-hanging engineering optimizations to make, we think Dimspector is quite fast, and aim to maintain this speed so that it can be used to provide per-edit feedback.